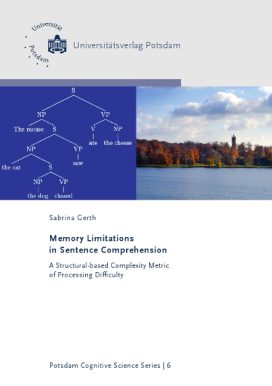

This dissertation addresses the question of how linguistic structures can be represented in working memory. We propose a memory-based computational model that derives offline and online complexity profiles in terms of a top-down parser for minimalist grammars (Stabler, 2011). The complexity metric reflects the amount of time an item is stored in memory. The presented architecture links grammatical representations stored in memory directly to cognitive behavior by deriving predictions about sentence processing difficulty.

Results from five different sentence comprehension experiments were used to evaluate the model's assumptions about memory limitations. The predictions of the complexity metric were compared to the locality (integration and storage) cost metric of Dependency Locality Theory (Gibson, 2000). Both metrics make comparable offline and online predictions for four of the five phenomena. The key difference between the two metrics is that the proposed complexity metric accounts for the structural complexity of intervening material. In contrast, DLT's integration cost metric considers the number of discourse referents, not the syntactic structural complexity.

We conclude that syntactic analysis plays a significant role in the memory requirements of parsing. An incremental top-down parser based on a grammar formalism readily computes offline and online complexity profiles, which can be used to derive predictions about sentence processing difficulty.

a structural-based complexity metric of processing difficulty

ISBN: 978-3-86956-321-3

175 pages

Release year 2015

Series: Potsdam Cognitive Science Series, 6

8,50 €

Non-taxable transaction according to § 1 (1) UStG/VAT Act in combination with § 2 (3) UStG/VAT Act (old version). In providing this service, the University of Potsdam does not constitute a Betrieb gewerblicher Art (commercial institution) according to § 1 (1) No. 6 or § 4 KStG/Corporate Tax Act. If the legal characterization of our business is subsequently changed to a commercial institution, we reserve the right to invoice VAT additionally.

Plus shipping costs.

Contact

Potsdam University Library

University Press

Am Neuen Palais 10

14476 Potsdam

Germany

verlag@uni-potsdam.de

0331 977-2094

0331 977-2292